Using schema auto-detection

Schema auto-detection

Schema auto-detection enables BigQuery to infer the schema for CSV, JSON, or Google Sheets data. Schema auto-detection is available when you load data into BigQuery and when you query an external data source.

When auto-detection is enabled, BigQuery infers the data type for each column. BigQuery selects a random file in the data source and scans up to the first 500 rows of data to use as a representative sample. BigQuery then examines each field and attempts to assign a data type to that field based on the values in the sample. If all of the rows in a column are empty, auto-detection will default to the STRING data type for the column.

If you don't enable schema auto-detection for CSV, JSON, or Google Sheets data, then you must provide the schema manually when creating the table.

You don't need to enable schema auto-detection for Avro, Parquet, ORC, Firestore export, or Datastore export files. These file formats are self-describing, so BigQuery automatically infers the table schema from the source data. For Parquet, Avro, and ORC files, you can optionally provide an explicit schema to override the inferred schema.

You can see the detected schema for a table in the following ways:

- Use the Google Cloud console.

- Use the bq command-line tool's bq show command.

When BigQuery detects schemas, it might, on rare occasions, change a field name to make it compatible with GoogleSQL syntax.

For information about data type conversions, see the following:

- Data type conversion when loading data from Datastore

- Data type conversion when loading data from Firestore

- Avro conversions

- Parquet conversions

- ORC conversions

Loading data using schema auto-detection

To enable schema auto-detection when loading data, use one of these approaches:

- In the Google Cloud console, in the Schema section, for Auto detect, check the Schema and input parameters option.

- In the bq command-line tool, use the bq load command with the --autodetect parameter.

When schema auto-detection is enabled, BigQuery makes a best-effort attempt to automatically infer the schema for CSV and JSON files. The auto-detection logic infers the schema field types by reading up to the first 500 rows of data. Leading lines are skipped if the --skip_leading_rows flag is present. The field types are based on the rows having the most fields. Therefore, auto-detection should work as expected as long as there is at least one row of data that has values in every column or field.
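The sampling rule described above can be pictured with a small sketch. This is an illustration only, not BigQuery's implementation: the function names are hypothetical, and it assumes that the 500-row limit is counted after any leading rows are skipped.

```python
from itertools import islice

SAMPLE_LIMIT = 500  # documented upper bound on the number of sampled rows


def sample_rows(rows, skip_leading_rows=0, limit=SAMPLE_LIMIT):
    """Skip the leading rows, then keep at most `limit` rows.

    Assumption: the limit applies after skipping, which the source
    text does not state explicitly.
    """
    return list(islice(rows, skip_leading_rows, skip_leading_rows + limit))


def rows_with_most_fields(sample):
    """Return only the rows whose field count equals the maximum in the sample."""
    if not sample:
        return []
    width = max(len(row) for row in sample)
    return [row for row in sample if len(row) == width]


rows = [
    ["id", "name", "score"],  # header row, skipped below
    ["1", "Alice"],           # short row: trailing field is missing
    ["2", "Bob", "9.5"],      # widest row, so it would drive the inferred types
    ["3"],
]
sample = sample_rows(rows, skip_leading_rows=1)
print(rows_with_most_fields(sample))
```

In this sketch only the row for Bob has a value in every column, which is why at least one complete row is needed for the detected types to be meaningful.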

Schema auto-detection is not used with Avro files, Parquet files, ORC files, Firestore export files, or Datastore export files. When you load these files into BigQuery, the table schema is automatically retrieved from the self-describing source data.

To use schema auto-detection when you load JSON or CSV data:

Console

In the Google Cloud console, go to the BigQuery page.

In the Explorer panel, expand your project and select a dataset.

Expand the Actions option and click Open.

In the details panel, click Create table.

On the Create table page, in the Source section:

- For Create table from, select your desired source type.

- In the source field, browse for the file or Cloud Storage bucket, or enter the Cloud Storage URI. Note that you cannot include multiple URIs in the Google Cloud console, but wildcards are supported. The Cloud Storage bucket must be in the same location as the dataset that contains the table you're creating.

- For File format, select CSV or JSON.

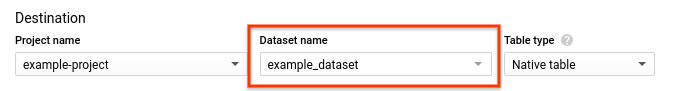

On the Create table page, in the Destination section:

- For Dataset name, choose the appropriate dataset.

- In the Table name field, enter the name of the table you're creating.

- Verify that Table type is set to Native table.

In the Schema section, for Auto detect, check the Schema and input parameters option.

Click Create table.

bq

Issue the bq load command with the --autodetect parameter.

(Optional) Supply the --location flag and set the value to your location.

The following command loads a file using schema auto-detect:

bq --location=LOCATION load \
--autodetect \
--source_format=FORMAT \
DATASET.TABLE \
PATH_TO_SOURCE

Replace the following:

- LOCATION: the name of your location. The --location flag is optional. For example, if you are using BigQuery in the Tokyo region, set the flag's value to asia-northeast1. You can set a default value for the location by using the .bigqueryrc file.

- FORMAT: either NEWLINE_DELIMITED_JSON or CSV.

- DATASET: the dataset that contains the table into which you're loading data.

- TABLE: the name of the table into which you're loading data.

- PATH_TO_SOURCE: the location of the CSV or JSON file.

Examples:

Enter the following command to load myfile.csv from your local machine into a table named mytable that is stored in a dataset named mydataset.

bq load --autodetect --source_format=CSV mydataset.mytable ./myfile.csv

Enter the following command to load myfile.json from your local machine into a table named mytable that is stored in a dataset named mydataset.

bq load --autodetect --source_format=NEWLINE_DELIMITED_JSON \
mydataset.mytable ./myfile.json

API

- Create a load job that points to the source data. For information about creating jobs, see Running BigQuery jobs programmatically. Specify your location in the location property in the jobReference section.

- Specify the data format by setting the sourceFormat property. To use schema auto-detection, this value must be set to NEWLINE_DELIMITED_JSON or CSV.

- Use the autodetect property to set schema auto-detection to true.
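As a rough sketch of the properties named above, the following builds the body of a load job as a plain Python dictionary. It only assembles the request body and does not send it. The helper name and all project, dataset, table, and bucket values are hypothetical placeholders; the destinationTable and sourceUris properties are not mentioned in the text above and are included on the assumption that a load job needs a destination and a source.

```python
import json

SUPPORTED_FORMATS = ("CSV", "NEWLINE_DELIMITED_JSON")


def build_load_job_body(project_id, location, dataset_id, table_id,
                        source_uri, source_format="CSV"):
    """Assemble a load job body with schema auto-detection enabled."""
    if source_format not in SUPPORTED_FORMATS:
        raise ValueError(
            f"auto-detection needs one of {SUPPORTED_FORMATS}, got {source_format!r}"
        )
    return {
        # The location goes in the jobReference section.
        "jobReference": {"projectId": project_id, "location": location},
        "configuration": {
            "load": {
                "sourceUris": [source_uri],
                "sourceFormat": source_format,
                "autodetect": True,
                "destinationTable": {
                    "projectId": project_id,
                    "datasetId": dataset_id,
                    "tableId": table_id,
                },
            }
        },
    }


# Placeholder values for illustration only.
body = build_load_job_body(
    "your-project", "asia-northeast1", "mydataset", "mytable",
    "gs://your-bucket/myfile.csv",
)
print(json.dumps(body, indent=2))
```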

Go

Before trying this sample, follow the Go setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Go API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Node.js

Before trying this sample, follow the Node.js setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Node.js API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

PHP

Before trying this sample, follow the PHP setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery PHP API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

To enable schema auto-detection, set the LoadJobConfig.autodetect property to True.

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.
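A minimal sketch of such a load with the google-cloud-bigquery client library might look like the following. It is not the official sample. The function name is hypothetical, and the table ID and Cloud Storage URI in the usage comment are placeholders that you would replace with your own values.

```python
def load_csv_with_autodetect(table_id, source_uri):
    """Load a CSV file from Cloud Storage and let BigQuery infer the schema."""
    # Imported inside the function so that this module can still be imported
    # where the google-cloud-bigquery package is not installed.
    from google.cloud import bigquery

    client = bigquery.Client()  # relies on Application Default Credentials
    job_config = bigquery.LoadJobConfig(
        autodetect=True,
        source_format=bigquery.SourceFormat.CSV,
    )
    load_job = client.load_table_from_uri(
        source_uri, table_id, job_config=job_config
    )
    load_job.result()  # wait for the load job to finish
    return client.get_table(table_id).schema


# Example call (placeholder identifiers, not run here):
#
#   schema = load_csv_with_autodetect(
#       "your-project.mydataset.mytable",
#       "gs://your-bucket/myfile.csv",
#   )
#   for field in schema:
#       print(field.name, field.field_type)
```

Returning the table schema after the job completes makes it easy to check what auto-detection chose for each column.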

Ruby

Before trying this sample, follow the Ruby setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Ruby API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Schema auto-detection for external data sources

Schema auto-detection can be used with CSV, JSON, and Google Sheets external data sources. When schema auto-detection is enabled, BigQuery makes a best-effort attempt to automatically infer the schema from the source data. If you don't enable schema auto-detection for these sources, then you must provide an explicit schema.

You don't need to enable schema auto-detection when you query external Avro, Parquet, ORC, Firestore export, or Datastore export files. These file formats are self-describing, so BigQuery automatically infers the table schema from the source data. For Parquet, Avro, and ORC files, you can optionally provide an explicit schema to override the inferred schema.

Using the Google Cloud console, you can enable schema auto-detection by checking the Schema and input parameters option for Auto detect.

Using the bq command-line tool, you can enable schema auto-detection when you create a table definition file for CSV, JSON, or Google Sheets data. When using the bq tool to create a table definition file, pass the --autodetect flag to the mkdef command to enable schema auto-detection, or pass the --noautodetect flag to disable auto-detection.

When you use the --autodetect flag, the autodetect setting is set to true in the table definition file. When you use the --noautodetect flag, the autodetect setting is set to false. If you do not provide a schema definition for the external data source when you create a table definition, and you do not use the --noautodetect or --autodetect flag, the autodetect setting defaults to true.
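The flag rules above can be summarized in a short sketch that produces a table-definition-like dictionary. The helper names are hypothetical and this does not call the bq tool. One case is not covered by the text, namely a schema supplied with neither flag; the sketch assumes autodetect is false there.

```python
def resolve_autodetect(flag=None, has_schema=False):
    """Return the autodetect setting for a table definition.

    flag: True for --autodetect, False for --noautodetect, None if neither.
    """
    if flag is not None:
        return flag
    # No flag and no schema: documented default is true.
    # No flag but a schema: assumed false (not stated in the text).
    return not has_schema


def build_table_definition(source_uris, source_format, flag=None, schema=None):
    """Build a dictionary shaped like a table definition file."""
    definition = {
        "sourceFormat": source_format,
        "sourceUris": list(source_uris),
        "autodetect": resolve_autodetect(flag, has_schema=schema is not None),
    }
    if schema is not None:
        definition["schema"] = {"fields": schema}
    return definition


# Placeholder URI for illustration only.
definition = build_table_definition(["gs://your-bucket/myfile.csv"], "CSV")
print(definition["autodetect"])
```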

When you create a table definition file by using the API, set the value of the autodetect property to true or false. Setting autodetect to true enables auto-detection. Setting autodetect to false disables auto-detection.

Auto-detection details

In addition to detecting schema details, auto-detection recognizes the following:

Compression

BigQuery recognizes gzip-compatible file compression when opening a file.

Date and time values

BigQuery detects date and time values based on the formatting of the source data.

Values in DATE columns must be in the following format: YYYY-MM-DD.

Values in TIME columns must be in the following format: HH:MM:SS[.SSSSSS] (the fractional-second component is optional).

For TIMESTAMP columns, BigQuery detects a wide array of timestamp formats, including, but not limited to:

- YYYY-MM-DD HH:MM

- YYYY-MM-DD HH:MM:SS

- YYYY-MM-DD HH:MM:SS.SSSSSS

- YYYY/MM/DD HH:MM

A timestamp can also contain a UTC offset or the UTC zone designator ('Z').

Here are some examples of values that BigQuery will automatically detect as timestamp values:

- 2018-08-19 12:11

- 2018-08-19 12:11:35.22

- 2018/08/19 12:11

- 2018-08-19 07:11:35.220 -05:00

If BigQuery doesn't recognize the format, it loads the column as a string data type. In that case, you might need to preprocess the source data before loading it. For example, if you are exporting CSV data from a spreadsheet, set the date format to match one of the examples shown here. Alternatively, you can transform the data after loading it into BigQuery.
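To check source values before loading, something like the following sketch can help. It covers only the formats listed in this section, whereas BigQuery accepts more timestamp formats than these. The patterns check the shape of a value, not whether the date or time is valid, and the function name is hypothetical.

```python
import re

DATE_RE = re.compile(r"\d{4}-\d{2}-\d{2}")
TIME_RE = re.compile(r"\d{2}:\d{2}:\d{2}(\.\d{1,6})?")
TIMESTAMP_RE = re.compile(
    r"\d{4}([-/])\d{2}\1\d{2}"          # date with a consistent - or / separator
    r" \d{2}:\d{2}(:\d{2}(\.\d{1,6})?)?"  # HH:MM, optional :SS and fraction
    r"( ?(Z|[+-]\d{2}:\d{2}))?"          # optional UTC designator or offset
)


def classify_datetime_string(value):
    """Return DATE, TIME, TIMESTAMP, or STRING for the listed formats."""
    if DATE_RE.fullmatch(value):
        return "DATE"
    if TIME_RE.fullmatch(value):
        return "TIME"
    if TIMESTAMP_RE.fullmatch(value):
        return "TIMESTAMP"
    return "STRING"


for sample in ["2018-08-19 12:11", "2018-08-19 07:11:35.220 -05:00", "19/08/2018"]:
    print(sample, "->", classify_datetime_string(sample))
```

A value such as 19/08/2018 falls through to STRING in this sketch, which matches the advice above to reformat such data before loading.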

Schema auto-detection for CSV data

CSV delimiter

BigQuery detects the following delimiters:

- comma ( , )

- pipe ( | )

- tab ( \t )
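One simple way to picture delimiter detection is to look for a candidate that appears the same number of times on every sampled line. The sketch below does exactly that and nothing more. It is not BigQuery's algorithm, it ignores quoting, and the function name is hypothetical.

```python
CANDIDATE_DELIMITERS = (",", "|", "\t")


def detect_delimiter(lines, candidates=CANDIDATE_DELIMITERS):
    """Return the first candidate that occurs equally often on every line.

    Returns None when no candidate appears consistently. Quoted fields
    that contain a delimiter are not handled by this sketch.
    """
    for delimiter in candidates:
        counts = {line.count(delimiter) for line in lines}
        if len(counts) == 1 and 0 not in counts:
            return delimiter
    return None


print(repr(detect_delimiter(["id|name|score", "1|Alice|9.5", "2|Bob|7.0"])))
```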

CSV header

BigQuery infers headers by comparing the first row of the file with other rows in the file. If the first line contains only strings, and the other lines contain other data types, BigQuery assumes that the first row is a header row. BigQuery assigns column names based on the field names in the header row. The names might be modified to meet the naming rules for columns in BigQuery. For example, spaces will be replaced with underscores.

Otherwise, BigQuery assumes the first row is a data row, and assigns generic column names such as string_field_1. Note that after a table is created, the column names cannot be updated in the schema, although you can change the names manually after the table is created. Another option is to provide an explicit schema instead of using autodetect.

You might have a CSV file with a header row, where all of the data fields are strings. In that case, BigQuery will not automatically detect that the first row is a header. Use the --skip_leading_rows option to skip the header row. Otherwise, the header will be imported as data. Also consider providing an explicit schema in this case, so that you can assign column names.
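The header rule can be approximated as follows. This sketch is a simplified reading of the description above, not BigQuery's actual logic: it treats anything that parses as a number or Boolean as a non-string value, and the function names are hypothetical.

```python
import csv
import io


def is_plain_string(value):
    """True if the value does not look like a Boolean or a number."""
    if value.lower() in ("true", "false"):
        return False
    try:
        float(value)  # note: float() also accepts "nan" and "inf"
    except ValueError:
        return True
    return False


def first_row_is_header(rows):
    """Header if row one is all strings and later rows hold other types."""
    if len(rows) < 2:
        return False
    first, rest = rows[0], rows[1:]
    if not all(is_plain_string(value) for value in first):
        return False
    return any(not is_plain_string(value) for row in rest for value in row)


def header_column_names(rows):
    """Return column names with spaces replaced by underscores, or None."""
    if not first_row_is_header(rows):
        return None
    return [name.replace(" ", "_") for name in rows[0]]


typed = list(csv.reader(io.StringIO("full name,age\nAlice,30\nBob,25\n")))
all_strings = list(csv.reader(io.StringIO("name,city\nAlice,Paris\nBob,Rome\n")))
print(header_column_names(typed))
print(header_column_names(all_strings))
```

The second file shows the all-strings case: no header is detected, so the first row would be treated as data unless leading rows are skipped.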

CSV quoted new lines

BigQuery detects quoted new line characters within a CSV field and does not interpret the quoted new line character as a row boundary.
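Python's standard csv module treats quoted new lines the same way, so it offers a convenient way to see what this means for a file before loading it. The sample data below is made up for illustration.

```python
import csv
import io

# The second record has a new line inside a quoted field.
data = 'id,note\n1,"first line\nsecond line"\n2,ok\n'

rows = list(csv.reader(io.StringIO(data, newline="")))

# Four physical lines, but only three logical rows.
print(len(data.splitlines()), "lines,", len(rows), "rows")
print(rows[1])
```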

Schema auto-detection for JSON data

JSON nested and repeated fields

BigQuery infers nested and repeated fields in JSON files. If a field value is a JSON object, then BigQuery loads the column as a RECORD type. If a field value is an array, then BigQuery loads the column as a repeated column. For an example of JSON data with nested and repeated data, see Loading nested and repeated JSON data.
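The mapping from JSON values to column shapes can be sketched as below. This is an illustration of the rule described above rather than BigQuery's implementation. The function name and the sample record are hypothetical, and the handling of empty arrays and null values is an assumption.

```python
import json


def infer_field(value):
    """Map one parsed JSON value to a (type, mode) pair."""
    if isinstance(value, list):
        # Assumption: an empty array carries no type information,
        # so this sketch falls back to STRING.
        element_type = infer_field(value[0])[0] if value else "STRING"
        return element_type, "REPEATED"
    if isinstance(value, dict):
        return "RECORD", "NULLABLE"
    if isinstance(value, bool):  # checked before int: bool is a subclass of int
        return "BOOLEAN", "NULLABLE"
    if isinstance(value, int):
        return "INTEGER", "NULLABLE"
    if isinstance(value, float):
        return "FLOAT", "NULLABLE"
    # Strings and null values fall through to STRING in this sketch.
    return "STRING", "NULLABLE"


record = json.loads(
    '{"name": "Alice", "address": {"city": "Paris"}, "tags": ["a", "b"]}'
)
shapes = {key: infer_field(value) for key, value in record.items()}
print(shapes)
```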

String conversion

If you enable schema auto-detection, then BigQuery converts strings into Boolean, numeric, or date/time types when possible. For example, using the following JSON data, schema auto-detection converts the id field to an INTEGER column:

{ "name":"Alice","id":"12"}

{ "name":"Bob","id":"34"}

{ "name":"Charles","id":"45"}

For more information, see Loading JSON data from Cloud Storage.
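The conversion of the id field can be mimicked with a short sketch that uses the same three records. It handles only Boolean and numeric strings and leaves out date and time detection. The function name and the exact patterns are assumptions for illustration, not BigQuery's rules.

```python
import json
import re

INT_RE = re.compile(r"[+-]?\d+")
FLOAT_RE = re.compile(r"[+-]?(\d+\.\d*|\.\d+|\d+)([eE][+-]?\d+)?")


def infer_string_type(values):
    """Return a type that every string in `values` could be converted to."""
    if not values:
        return "STRING"
    if all(value.lower() in ("true", "false") for value in values):
        return "BOOLEAN"
    if all(INT_RE.fullmatch(value) for value in values):
        return "INTEGER"
    if all(FLOAT_RE.fullmatch(value) for value in values):
        return "FLOAT"
    return "STRING"


lines = [
    '{ "name":"Alice","id":"12"}',
    '{ "name":"Bob","id":"34"}',
    '{ "name":"Charles","id":"45"}',
]
records = [json.loads(line) for line in lines]
column_types = {
    key: infer_string_type([record[key] for record in records])
    for key in records[0]
}
print(column_types)
```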

Schema auto-detection for Google Sheets

For Sheets, BigQuery auto-detects whether the first row is a header row, similar to auto-detection for CSV files. If the first line is identified as a header, BigQuery assigns column names based on the field names in the header row and skips the row. The names might be modified to meet the naming rules for columns in BigQuery. For example, spaces will be replaced with underscores.

Table security

To control access to tables in BigQuery, see Introduction to table access controls.